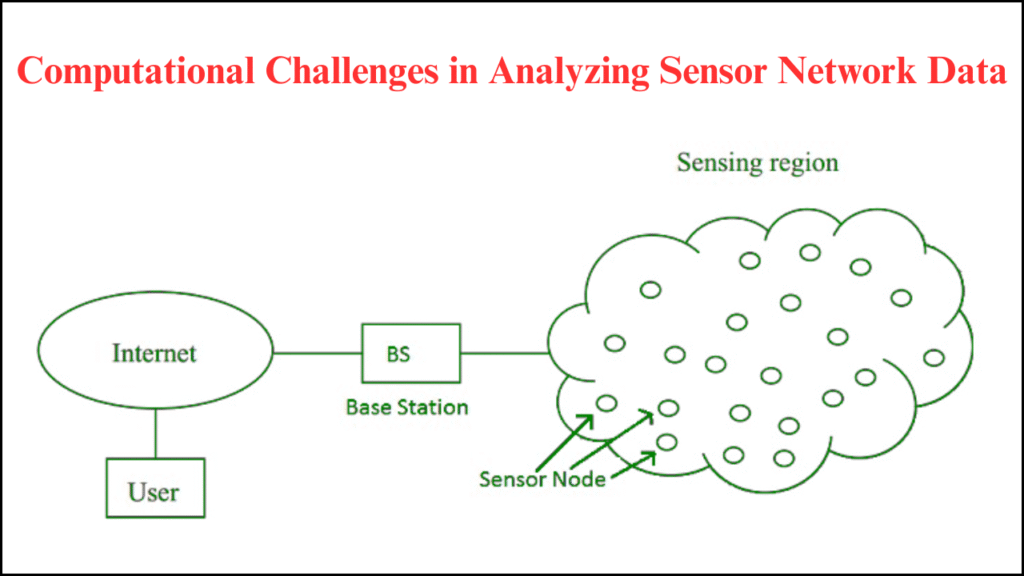

Sensor networks form the backbone of modern digital ecosystems, powering applications in smart cities, healthcare, agriculture, disaster management, and industrial automation. Massive streams of data generated from distributed sensors provide rich opportunities for insights, but they also introduce significant computational challenges. Data from sensors is often dynamic, heterogeneous, and noisy, demanding sophisticated methods for storage, transmission, and analysis. This article explores the computational challenges in analyzing sensor network data, presenting them in structured points and tables for clarity.

Key Characteristics of Sensor Network Data

- High Volume: Sensor nodes continuously generate large datasets, making storage and processing demanding.

- Real-Time Nature: Many applications, such as healthcare monitoring or disaster alerts, require instant analysis.

- Distributed Sources: Data originates from multiple spatially spread nodes, increasing complexity in synchronization.

- Heterogeneity: Sensors differ in precision, frequency, and format, leading to integration challenges.

- Energy Constraints: Sensor nodes often operate on limited power, affecting computation and transmission.

- Noisy Inputs: Data may include errors due to environmental interference or hardware limitations.

Major Computational Challenges in Sensor Data Analysis

1. Data Volume and Scalability

- Huge streams of data require scalable computational infrastructure.

- Traditional centralized processing struggles to handle continuous inflows.

- Distributed computing frameworks like Hadoop or Spark are often employed, but integrating them with sensor networks poses efficiency issues.

2. Real-Time Data Processing

- Delays in analysis reduce the effectiveness of applications like traffic monitoring or medical alerts.

- Algorithms must be lightweight yet accurate to balance latency and precision.

- Edge computing is often introduced to cut latency, but providing enough computational power on resource-constrained edge nodes remains a hurdle.
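
To make "lightweight yet accurate" concrete, the sketch below smooths a reading stream with an exponential moving average, which keeps a single number of state and does constant work per reading. It is a minimal illustration, not a prescribed method; the class name `EmaSmoother` and the smoothing factor `alpha` are our own choices. A larger `alpha` reacts faster (lower latency) but filters less noise, which is the latency/precision balance described above.

```python
from typing import Optional


class EmaSmoother:
    """Exponential moving average: O(1) state and O(1) work per reading."""

    def __init__(self, alpha: float) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: Optional[float] = None

    def update(self, reading: float) -> float:
        # The first reading initializes the state; later readings are blended in.
        if self.value is None:
            self.value = reading
        else:
            self.value = self.alpha * reading + (1.0 - self.alpha) * self.value
        return self.value


smoother = EmaSmoother(alpha=0.5)
smoothed = [smoother.update(r) for r in [10.0, 12.0, 14.0]]
print(smoothed)  # [10.0, 11.0, 12.5]
```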

3. Energy Efficiency

- Sensor nodes with limited battery power cannot support intensive computation or frequent communication.

- Algorithms must minimize processing complexity and communication cost.

- The trade-off between energy saving and the accuracy of results remains a persistent issue.
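
One common way to cut communication cost is a send-on-delta (deadband) policy: a node transmits only when its reading has moved by at least a threshold since the last transmitted value. The function below is a hypothetical sketch of that policy; `send_on_delta` and `threshold` are illustrative names. The threshold makes the energy/accuracy trade-off explicit, because the receiver's copy of the value can lag the true reading by up to `threshold`.

```python
from typing import Iterable, List, Optional, Tuple


def send_on_delta(readings: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Return the (index, value) pairs a node would transmit under send-on-delta."""
    sent: List[Tuple[int, float]] = []
    last_sent: Optional[float] = None
    for i, value in enumerate(readings):
        # Transmit the first reading, then only changes of at least `threshold`
        # relative to the last value the receiver actually knows about.
        if last_sent is None or abs(value - last_sent) >= threshold:
            sent.append((i, value))
            last_sent = value
    return sent


readings = [20.0, 20.1, 20.2, 20.6, 20.7, 21.5, 21.4]
print(send_on_delta(readings, threshold=0.5))  # [(0, 20.0), (3, 20.6), (5, 21.5)]
```

With a 0.5-unit threshold, the seven readings above produce three transmissions instead of seven.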

4. Data Integration and Heterogeneity

- Multiple sensors produce diverse data types such as audio, video, temperature, or pressure.

- Standardizing and fusing heterogeneous data requires advanced preprocessing.

- Semantic interoperability across platforms is difficult to achieve.
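
A first step toward integration is usually mapping every reading onto one schema with one canonical unit per quantity. The sketch assumes raw records arrive as dictionaries with `id`, `quantity`, `unit`, and `value` keys; that record layout, the `Reading` class, and the converter table are hypothetical and cover only a few units. Semantic interoperability goes well beyond unit conversion, but this shows the kind of preprocessing involved.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Reading:
    sensor_id: str
    quantity: str
    value: float
    unit: str


# Hypothetical converter table: (quantity, source unit) -> (canonical unit, function).
CONVERTERS: Dict[Tuple[str, str], Tuple[str, Callable[[float], float]]] = {
    ("temperature", "degC"): ("degC", lambda v: v),
    ("temperature", "degF"): ("degC", lambda v: (v - 32.0) * 5.0 / 9.0),
    ("temperature", "K"): ("degC", lambda v: v - 273.15),
    ("pressure", "kPa"): ("kPa", lambda v: v),
    ("pressure", "hPa"): ("kPa", lambda v: v / 10.0),
    ("pressure", "psi"): ("kPa", lambda v: v * 6.894757),
}


def normalize(raw: Dict[str, str]) -> Reading:
    """Map one raw record onto the common schema, converting to the canonical unit."""
    key = (raw["quantity"], raw["unit"])
    if key not in CONVERTERS:
        raise ValueError(f"no converter for {key}")
    unit, convert = CONVERTERS[key]
    return Reading(raw["id"], raw["quantity"], convert(float(raw["value"])), unit)


print(normalize({"id": "t7", "quantity": "temperature", "unit": "degF", "value": "212"}))
# Reading(sensor_id='t7', quantity='temperature', value=100.0, unit='degC')
```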

5. Fault Tolerance and Reliability

- Sensor failures or communication drops lead to incomplete datasets.

- Computational models must include redundancy and error-correction mechanisms.

- Distinguishing between faulty sensor data and actual anomalies is often challenging.
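
One heuristic for separating faults from genuine anomalies exploits spatial correlation: if a node disagrees sharply with the median of its neighbors, the node itself is suspect; if the whole neighborhood shifts together, a real event is more likely. The function below is a simplified sketch under the assumption that neighbors observe roughly the same phenomenon; `classify_reading`, `baseline`, and `tolerance` are illustrative names, and a deployed system would need tuned thresholds and temporal context.

```python
from statistics import median
from typing import Sequence


def classify_reading(target: float, neighbors: Sequence[float],
                     baseline: float, tolerance: float) -> str:
    """Label a node's reading as 'normal', 'event', or 'suspected_fault'."""
    if not neighbors:
        raise ValueError("need at least one neighbor reading")
    consensus = median(neighbors)
    if abs(target - consensus) > tolerance:
        # The node disagrees with its neighborhood: more likely a sensor fault.
        return "suspected_fault"
    if abs(consensus - baseline) > tolerance:
        # Node and neighbors shifted together: more likely a real event.
        return "event"
    return "normal"


print(classify_reading(85.0, [22.0, 21.5, 22.3], baseline=22.0, tolerance=3.0))  # suspected_fault
print(classify_reading(60.0, [58.0, 61.0, 59.5], baseline=22.0, tolerance=3.0))  # event
```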

6. Noise and Data Quality Issues

- Environmental interference creates noisy readings.

- Outliers distort machine learning models if not filtered properly.

- Real-time denoising algorithms increase computational load.
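
A Hampel filter is a common robust choice for removing spikes: it replaces a point with its local median when the point lies too far from that median, measured in scaled median absolute deviations (MAD). The sketch below is a straightforward, unoptimized version; the window size and threshold defaults are assumptions. Sorting a window for every sample costs far more than keeping a running average, which illustrates why real-time denoising adds computational load.

```python
from statistics import median
from typing import List, Sequence


def hampel(x: Sequence[float], half_window: int = 3, n_sigmas: float = 3.0) -> List[float]:
    """Replace outliers with the median of their surrounding window."""
    k = 1.4826  # scales MAD to a standard-deviation estimate for Gaussian noise
    out = list(x)
    n = len(x)
    for i in range(n):
        lo, hi = max(0, i - half_window), min(n, i + half_window + 1)
        window = x[lo:hi]
        med = median(window)
        mad = median(abs(v - med) for v in window)
        # Skip flat windows (mad == 0) to avoid flagging every tiny deviation.
        if mad > 0 and abs(x[i] - med) > n_sigmas * k * mad:
            out[i] = med
    return out


signal = [20.0, 20.2, 19.9, 20.1, 45.0, 20.0, 20.3, 19.8]
print(hampel(signal))  # the 45.0 spike becomes 20.1; other values are unchanged
```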

7. Security and Privacy in Computation

- Sensitive sensor data, particularly in healthcare or surveillance, must remain protected.

- Encryption adds computational overhead and latency.

- The distributed nature of sensor networks enlarges their attack surface, making them more exposed to cyberattacks.

8. Storage and Transmission Bottlenecks

- Continuous inflows can quickly exceed the capacity of traditional storage systems.

- Compression reduces size, but lossy schemes may degrade data quality.

- Transmission consumes bandwidth and power, requiring optimization.
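
The sketch below illustrates both halves of the storage trade-off. Quantizing readings to a fixed resolution (here an assumed 0.01 units) is the lossy step; the delta encoding and `zlib` compression that follow are lossless. Slowly varying signals produce small deltas that compress well. `SCALE`, `encode`, and `decode` are illustrative names, and the 32-bit integer packing assumes scaled values fit in that range.

```python
import struct
import zlib
from typing import List, Sequence

SCALE = 100  # assumed sensor resolution of 0.01 units; values stored in hundredths


def encode(readings: Sequence[float]) -> bytes:
    """Quantize (lossy), delta-encode, then zlib-compress (lossless)."""
    if not readings:
        return zlib.compress(b"")
    ints = [round(r * SCALE) for r in readings]
    deltas = [ints[0]] + [b - a for a, b in zip(ints, ints[1:])]
    raw = struct.pack(f"<{len(deltas)}i", *deltas)  # little-endian 32-bit ints
    return zlib.compress(raw, 9)


def decode(blob: bytes) -> List[float]:
    """Invert encode(): decompress, then rebuild values by cumulative summation."""
    raw = zlib.decompress(blob)
    deltas = struct.unpack(f"<{len(raw) // 4}i", raw)
    values: List[float] = []
    acc = 0
    for d in deltas:
        acc += d
        values.append(acc / SCALE)
    return values


readings = [20.0 + 0.01 * (i % 7) for i in range(500)]
blob = encode(readings)
print(len(blob), "bytes compressed vs", 8 * len(readings), "bytes as float64")
```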

9. Machine Learning and AI Integration

- Applying AI models to sensor data demands high computational resources.

- Training deep learning models on limited-energy devices is impractical.

- Transfer learning and model compression are promising strategies, but their deployment on constrained sensor hardware is still evolving.

10. Synchronization of Distributed Data

- Time-stamped data from geographically distributed sensors requires precise synchronization.

- Clock drift and network latency complicate alignment.

- Inaccurate synchronization results in flawed analysis outcomes.
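
Sender-receiver schemes estimate the offset between two clocks from a two-way message exchange. If node A sends at time t1 on its own clock, node B receives at t2 and replies at t3 on its clock, and A receives the reply at t4, then, assuming equal delay in both directions, the offset of B relative to A is ((t2 - t1) - (t4 - t3)) / 2. This is the pairwise exchange used by protocols such as TPSN; the function below is a minimal sketch of the arithmetic only, and its name is illustrative. Asymmetric or variable latency breaks the equal-delay assumption and shows up directly as offset error.

```python
from typing import Tuple


def two_way_offset(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Estimate (offset of B relative to A, one-way delay) from one exchange.

    t1: A sends request (A's clock)   t2: B receives it (B's clock)
    t3: B sends reply (B's clock)     t4: A receives reply (A's clock)
    Assumes the propagation delay is identical in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2.0
    delay = ((t2 - t1) + (t4 - t3)) / 2.0
    return offset, delay


# Synthetic example: B runs 5 ms ahead of A and each hop takes 2 ms.
print(two_way_offset(100.0, 107.0, 110.0, 107.0))  # (5.0, 2.0)
```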

Computational Challenges and Their Implications

| Challenge | Description | Implications |
|---|---|---|
| Data Volume | Large datasets from continuous sensor readings | High storage cost, need for distributed processing |
| Real-Time Processing | Instant analysis required for safety-critical applications | Delay affects system responsiveness |
| Energy Constraints | Limited battery life restricts computational capacity | Need for lightweight algorithms, trade-offs with accuracy |
| Heterogeneous Data | Different formats and modalities from diverse sensors | Complexity in fusion, standardization, and semantic integration |
| Fault Tolerance | Node failures or data losses during collection | Requires error correction and redundancy |
| Noise & Quality Issues | Environmental interference and hardware limitations | Necessitates advanced filtering and cleaning |
| Security & Privacy | Sensitive data needs protection during computation | Encryption increases computational load |
| Storage & Transmission | Large data flow strains memory and bandwidth | Compression, selective storage, and optimized routing are required |
| AI Integration | Use of machine learning models for predictive analytics | Requires powerful hardware or optimized lightweight models |
| Synchronization | Aligning distributed time-stamped data | Errors lead to inconsistent analysis results |

Techniques to Address Computational Challenges

1. Edge and Fog Computing

- Placing computation closer to the source reduces latency.

- Fog nodes balance workload between cloud and sensors.

- Particularly effective for time-sensitive applications such as autonomous driving.

2. Data Compression and Aggregation

- Compressing raw data before transmission conserves storage and bandwidth.

- Aggregation techniques reduce redundancy by summarizing local sensor readings.

- Smart algorithms ensure minimal loss of critical information.
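
Aggregation works best when partial results can be merged as they travel toward the sink. The sketch below keeps a count, minimum, maximum, and running total per cluster; two such summaries combine exactly, so each hop forwards four numbers instead of every raw reading. The `Summary`, `summarize`, and `merge` names are illustrative, and which statistics to keep depends on the application (a median, for instance, cannot be merged this simply).

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Summary:
    count: int
    minimum: float
    maximum: float
    total: float

    @property
    def mean(self) -> float:
        return self.total / self.count


def summarize(readings: Iterable[float]) -> Summary:
    """Summarize a cluster's raw readings into four numbers."""
    values = list(readings)
    if not values:
        raise ValueError("no readings to summarize")
    return Summary(len(values), min(values), max(values), sum(values))


def merge(a: Summary, b: Summary) -> Summary:
    """Combine two summaries exactly, e.g. at the next hop toward the sink."""
    return Summary(a.count + b.count, min(a.minimum, b.minimum),
                   max(a.maximum, b.maximum), a.total + b.total)


cluster_a = summarize([21.0, 22.0, 23.0])
cluster_b = summarize([19.0, 25.0])
combined = merge(cluster_a, cluster_b)
print(combined.count, combined.minimum, combined.maximum, combined.mean)  # 5 19.0 25.0 22.0
```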

3. Lightweight Algorithms

- Algorithms must be optimized to run on constrained devices.

- Approximation methods sacrifice precision for faster and energy-efficient computation.

- Model pruning and quantization help in deploying AI on low-power nodes.
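
Quantization typically stores model weights as 8-bit integers plus a scale factor, cutting weight memory roughly fourfold relative to 32-bit floats. The sketch below shows symmetric, per-tensor post-training quantization on a plain list of weights; the function names are hypothetical, and production toolchains often add per-channel scales, calibration data, and integer-only arithmetic.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.0, 0.8, -0.05]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # at most scale / 2
```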

4. Machine Learning for Data Cleaning

- Adaptive filters identify and remove noisy inputs.

- Outlier detection methods improve quality before final analysis.

- Incremental learning ensures adaptation to new patterns in data streams.
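
Incremental learning can be as simple as maintaining running statistics. The detector below uses Welford's online algorithm to update the mean and variance in constant memory and flags readings whose z-score exceeds a threshold. It is a sketch under our own assumptions: the class name, the `threshold` and `warmup` parameters, and the choice to exclude flagged points from the model are illustrative. Because it never forgets old data, a non-stationary stream would need a windowed or decaying variant.

```python
import math


class OnlineZScore:
    """Incremental mean/variance (Welford's algorithm) with z-score outlier flags."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10) -> None:
        self.threshold = threshold
        self.warmup = max(2, warmup)  # need at least 2 points for a sample variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def _is_outlier(self, x: float) -> bool:
        if self.n < self.warmup:
            return False  # not enough history yet; accept and learn
        std = math.sqrt(self.m2 / (self.n - 1))
        return std > 0 and abs(x - self.mean) / std > self.threshold

    def update(self, x: float) -> bool:
        """Return True if x is flagged; only unflagged points update the model."""
        flagged = self._is_outlier(x)
        if not flagged:
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return flagged


detector = OnlineZScore(threshold=3.0, warmup=5)
stream = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 30.0, 10.1]
flags = [detector.update(x) for x in stream]
print(flags)  # [False, False, False, False, False, False, True, False]
```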

5. Redundancy and Fault Management

- Multiple sensors covering the same parameter provide fault tolerance.

- Algorithms detect discrepancies to identify faulty sensors.

- Recovery protocols maintain system stability during failures.

6. Secure Computing Frameworks

- Encryption ensures the confidentiality of sensitive sensor data.

- Blockchain-based ledgers can enhance trust among distributed nodes, though they add their own computational and storage costs.

- Homomorphic encryption allows computation on encrypted data but increases computational demand.
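
Homomorphic encryption is one way to compute on protected data; secure multi-party computation is another. The sketch below swaps in additive secret sharing, a basic multi-party technique, to compute a network-wide sum: each sensor splits its integer reading into random shares, one per aggregator, so no single aggregator learns an individual value. It assumes non-negative integer readings (for example, temperatures scaled by 100), non-colluding aggregators, and a modulus larger than any possible sum; all names are hypothetical. The cost is visible too: every reading becomes `n_parties` messages.

```python
import secrets
from typing import List, Sequence

MODULUS = 2**61 - 1  # assumed modulus; must exceed any possible true sum


def share(value: int, n_parties: int) -> List[int]:
    """Split value into n_parties random shares that sum to value modulo MODULUS."""
    if n_parties < 2:
        raise ValueError("need at least two aggregators")
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(values: Sequence[int], n_parties: int = 3) -> int:
    """Sum readings so that no single aggregator sees an individual reading."""
    partial = [0] * n_parties  # partial[j] is what aggregator j accumulates
    for v in values:
        for j, s in enumerate(share(v, n_parties)):
            partial[j] = (partial[j] + s) % MODULUS
    # Aggregators reveal only their partial sums; combined, they give the total.
    return sum(partial) % MODULUS


print(secure_sum([2150, 2230, 1990]))  # 6370
```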

7. Synchronization Protocols

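- Time synchronization protocols such as TPSN (Timing-sync Protocol for Sensor Networks) and FTSP (Flooding Time Synchronization Protocol) estimate and compensate for clock offset and drift.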

- Hybrid methods combine hardware-based and software-based synchronization.

- Accurate synchronization improves reliability in event detection.
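
FTSP-style synchronization estimates clock skew (drift rate) and offset by fitting a line through recent pairs of local and reference timestamps. The function below is a sketch of only that least-squares step; flooding, root election, and the size of the timestamp table are outside its scope, and the names and synthetic timestamps are illustrative.

```python
from typing import Sequence, Tuple


def fit_clock(local: Sequence[float], reference: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of reference_time = skew * local_time + intercept."""
    n = len(local)
    if n < 2 or n != len(reference):
        raise ValueError("need at least two matching timestamp pairs")
    mean_loc = sum(local) / n
    mean_ref = sum(reference) / n
    sxx = sum((t - mean_loc) ** 2 for t in local)
    if sxx == 0:
        raise ValueError("local timestamps must not all be equal")
    sxy = sum((t - mean_loc) * (r - mean_ref) for t, r in zip(local, reference))
    skew = sxy / sxx  # drift rate of the reference clock relative to the local clock
    intercept = mean_ref - skew * mean_loc
    return skew, intercept


def to_reference(t_local: float, skew: float, intercept: float) -> float:
    """Convert a local timestamp into the reference timebase."""
    return skew * t_local + intercept


local_ts = [0.0, 10.0, 20.0, 30.0, 40.0]
reference_ts = [1.0001 * t + 5.0 for t in local_ts]  # synthetic: 100 ppm skew, 5 s offset
skew, intercept = fit_clock(local_ts, reference_ts)
print(skew, intercept, to_reference(100.0, skew, intercept))
```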

Solutions to Computational Challenges

| Challenge | Proposed Solutions |
|---|---|
| Data Volume | Distributed frameworks, cloud integration, and data summarization |
| Real-Time Processing | Edge computing, fog computing, streaming analytics |
| Energy Constraints | Lightweight algorithms, duty cycling, energy harvesting techniques |
| Heterogeneous Data | Middleware solutions, data fusion frameworks, semantic ontologies |
| Fault Tolerance | Redundant sensing, error correction codes, anomaly detection |
| Noise & Quality Issues | Adaptive filtering, statistical denoising, incremental learning |
| Security & Privacy | End-to-end encryption, blockchain, secure multi-party computation |
| Storage & Transmission | Compression, selective storage policies, bandwidth-efficient routing |
| AI Integration | Model compression, federated learning, transfer learning |
| Synchronization | Time synchronization protocols (e.g., TPSN, FTSP), hybrid hardware/software synchronization |

Emerging Trends in Computational Approaches

- Federated Learning: Enables distributed model training without transferring raw data.

- Quantum Computing: May eventually offer faster optimization for complex data analysis, though practical use remains experimental.

- Self-Adaptive Networks: Networks that autonomously adjust computational strategies based on context.

- Energy Harvesting: Use of renewable energy sources to support computational loads in remote sensors.

- Hybrid Architectures: A combination of cloud, fog, and edge computing for optimized performance.
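
In its best-known form, federated averaging (FedAvg), federated learning has each node train locally and send only its model weights and sample count to a coordinator, which computes a weighted average. The sketch below shows just that aggregation step on plain weight lists; local training, communication, and security are omitted, and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def fed_avg(updates: Sequence[Tuple[Sequence[float], int]]) -> List[float]:
    """Weighted average of client weight vectors, weighted by local sample count."""
    if not updates:
        raise ValueError("no client updates")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weight vectors of the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two nodes: one trained on 100 local samples, the other on 300.
print(fed_avg([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))  # [2.5, 3.5]
```

Raw sensor data never leaves the nodes; only the weight vectors and counts are exchanged.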

Summing Up

Sensor network data holds immense potential for innovation, but computational challenges make its analysis complex and resource-intensive. Data volume, heterogeneity, real-time requirements, and energy constraints create bottlenecks that require advanced solutions. Emerging technologies such as edge computing, federated learning, and secure computation frameworks are gradually addressing these obstacles. A balanced approach that integrates lightweight algorithms, efficient synchronization, and robust security measures can help sensor networks deliver on their promise across diverse domains while remaining computationally feasible.